The Brain’s Picture of the External World

Chapter 22 (excerpt) from In Search of Memory: The Emergence of a New Science of Mind, Eric R. Kandel.

The reason that the brain can derive meaning from, say, a limited analysis of a visual scene is that the visual system does not simply record a scene passively, as a camera does. Rather, perception is creative: the visual system transforms the two-dimensional patterns of light on the retina of the eye into a logically coherent and stable interpretation of a three-dimensional sensory world. Built into neural pathways of the brain are complex rules of guessing; those rules allow the brain to extract information from relatively impoverished patterns of incoming neural signals and turn it into a meaningful image. The brain is thus the ambiguity-resolving machine par excellence!

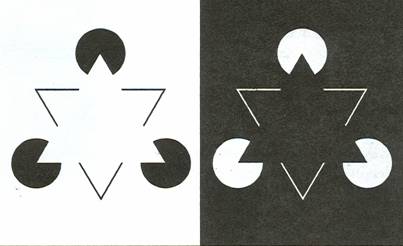

Cognitive psychologists demonstrated this ability with studies of illusions, that is, misreadings of visual information by the brain. For example, an image that does not contain the complete outline of a triangle is nevertheless seen as a triangle because the brain expects to form certain images (figure 22-1). The brain's expectations are built into the anatomical and functional organization of the visual pathways; they are derived in part from experience but in large part from the innate neural wiring for vision.

22-1. The brain’s reconstruction of sensory information. The brain resolves ambiguities by creating shapes from incomplete data, for example, filling in the missing lines of these triangles. If you hide parts of these pictures, your brain is deprived of some clues it uses to form conclusions and the triangles vanish.

To appreciate these evolved perceptual skills, it is useful to compare the computational abilities of the brain with those of artificial computational or information-processing devices. When you sit at a sidewalk cafe and watch people go by, you can, with minimal clues, readily distinguish men from women, friends from strangers. Perceiving and recognizing objects and people seem effortless. However, computer scientists have learned from constructing intelligent machines that these perceptual discriminations require computations that no computer can begin to approach. Merely recognizing a person is an amazing computational achievement. All of our perceptions (seeing, hearing, smelling, and touching) are analytical triumphs.

The second assumption developed by cognitive psychologists was that the brain achieves these analytic triumphs by developing an internal representation of the external world, a cognitive map, and then using it to generate a meaningful image of what is out there to see and to hear. The cognitive map is then combined with information about past events and is modulated by attention. Finally, the sensory representations are used to organize and orchestrate purposeful action.

The idea of a cognitive map proved an important advance in the study of behavior and brought cognitive psychology and psychoanalysis closer together. It also provided a view of mind that was much broader and more interesting than that of the behaviorists. But the concept was not without problems. The biggest problem was the fact that the internal representations inferred by cognitive psychologists were only sophisticated guesses; they could not be examined directly and thus were not readily accessible to objective analysis. To see the internal representations, to peer into the black box of mind, cognitive psychologists had to join forces with biologists.

Fortunately, at the same time cognitive psychology was emerging in the 1960s, the biology of higher brain function was maturing. During the 1970s and 1980s, behaviorists and cognitive psychologists began collaborating with brain scientists. As a result, neural science, the biological science concerned with brain processes, began to merge with behaviorist and cognitive psychology, the sciences concerned with mental processes. The synthesis that emerged from these interactions gave rise to the field of cognitive neural science, which focused on the biology of internal representations and drew heavily on two lines of inquiry: the electrophysiological study of how sensory information is represented in the brains of animals, and the imaging of sensory and other complex internal representations in the brains of intact, behaving human beings.

Both of these approaches were used to examine the internal representation of space, which I wanted to study, and they revealed that space is indeed the most complex of sensory representations. To make any sense of it, I needed first to take stock of what had already been learned from the study of simpler representations. Fortunately for me, the major contributors to this field were Wade Marshall, Vernon Mountcastle, David Hubel, and Torsten Wiesel, four people I knew very well, and whose work I was intimately familiar with.

The electrophysiological study of sensory representation was initiated by my mentor, Wade Marshall, the first person to study how touch, vision, and hearing were represented in the cerebral cortex.

These physiological studies revealed two principles regarding sensory maps. First, in both people and monkeys, each part of the body is represented in a systematic way in the cortex. Second, sensory maps are not simply a direct replica in the brain of the topography of body surface. Rather, they are a dramatic distortion of the body form. Each part of the body is represented in proportion to its importance in sensory perception, not to its size. Thus the fingertips and the mouth, which are extremely sensitive regions for touch perception, have a proportionately larger representation than does the skin of the back, which although more extensive is less sensitive to touch. This distortion reflects the density of sensory innervation in different areas of the body. Woolsey later found similar distortions in other experimental animals; in rabbits, for example, the face and nose have the largest representation in the brain because they are the primary means through which the animal explores its environment. As we have seen, these maps can be modified by experience.

In the early 1950s Vernon Mountcastle at Johns Hopkins extended the analysis of sensory representation by recording from single cells. Mountcastle found that individual neurons in the somatosensory cortex respond to signals from only a limited area of the skin, an area he called the receptive field of the neuron. For example, a cell in the hand region of the somatosensory cortex of the left hemisphere might respond only to stimulation of the tip of the middle finger of the right hand and to nothing else.

Mountcastle also discovered that tactile sensation is made up of several distinct submodalities; for example, touch includes the sensation produced by hard pressure on the skin as well as that produced by a light brush against it. He found that each distinct submodality has its own private pathway within the brain and that this segregation is maintained at each relay in the brain stem and in the thalamus. The most fascinating example of this segregation is evident in the somatosensory cortex, which is organized into columns of nerve cells extending from its upper to its lower surface. Each column is dedicated to one submodality and one area of skin. Thus all the cells in one column might receive information on superficial touch from the end of the index finger. Cells in another column might receive information on deep pressure from the index finger. Mountcastle's work revealed the extent to which the sensory message about touch is deconstructed; each submodality is analyzed separately and reconstructed and combined only in later stages of information processing. Mountcastle also proposed the now generally accepted idea that these columns form the basic information-processing modules of the cortex.

Other sensory modalities are organized similarly. The analysis of perception is more advanced in vision than in any other sense. Here we see that visual information, relayed from one point to another along the pathway from the retina to the cerebral cortex, is also transformed in precise ways, first being deconstructed and then reconstructed, all without our being in any way aware of it.

In the early 1950s, Stephen Kuffler recorded from single cells in the retina and made the surprising discovery that those cells do not signal absolute levels of light; rather, they signal the contrast between light and dark. He found that the most effective stimulus for exciting retinal cells is not diffuse light but small spots of light. David Hubel and Torsten Wiesel found a similar principle operating in the next relay stage, located in the thalamus. However, they made the astonishing discovery that once the signal reaches the cortex, it is transformed. Most cells in the cortex do not respond vigorously to small spots of light. Instead, they respond to linear contours, to elongated edges between lighter and darker areas, such as those that delineate objects in our environment.
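The contrast principle can be made concrete with a toy calculation. The sketch below is only an illustration, not a model taken from Kuffler's recordings: it assumes a hypothetical "on-center" cell whose response is the light at the center of a 3x3 patch minus the average light in the eight surrounding positions. The function name, the patch size, and the intensity values are all assumptions chosen for clarity.

```python
def center_surround_response(patch):
    """Toy on-center cell over a 3x3 patch of light intensities (0.0 to 1.0).

    Assumption for illustration: the center position excites the cell and
    the eight surrounding positions inhibit it by their average intensity.
    """
    center = patch[1][1]
    surround = [
        patch[row][col]
        for row in range(3)
        for col in range(3)
        if (row, col) != (1, 1)
    ]
    return center - sum(surround) / len(surround)


# Diffuse light: every position has the same intensity.
diffuse_dim = [[0.25] * 3 for _ in range(3)]
diffuse_bright = [[0.75] * 3 for _ in range(3)]

# A small spot of light on a dark background.
small_spot = [
    [0.0, 0.0, 0.0],
    [0.0, 1.0, 0.0],
    [0.0, 0.0, 0.0],
]

for name, patch in [
    ("diffuse dim", diffuse_dim),
    ("diffuse bright", diffuse_bright),
    ("small spot", small_spot),
]:
    print(name, center_surround_response(patch))
```

Under these assumptions the toy cell gives the same (zero) response to dim and bright diffuse light, because center and surround cancel, and responds strongly only to the small spot. In that sense it reports contrast rather than the absolute level of light.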

Most amazingly, each cell in the primary visual cortex responds only to a specific orientation of such light-dark contours. Thus if a square block is rotated slowly before our eyes, slowly changing the angle of each edge, different cells will fire in response to these different angles. Some cells respond best when the linear edge is orientated vertically, others when the edge is horizontal, and still other cells when the axis is at an oblique angle. Deconstructing visual objects into line segments of different orientation appears to be the initial step in encoding the forms of objects in our environment. Hubel and Wiesel next found that in the visual system, as in the somatosensory system, cells with similar properties (in this case, cells with similar axes of orientation) are grouped together in columns.
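A small sketch may help picture what orientation selectivity means in practice. It is a toy illustration under stated assumptions, not a description of Hubel and Wiesel's data: each hypothetical cell has a preferred edge angle, and its response falls off smoothly as the edge rotates away from that angle. The bell-shaped tuning curve, the `sharpness` parameter, and the four preferred angles are all assumptions.

```python
import math

# Hypothetical population of four cells, labeled by preferred edge angle
# in degrees (0 = horizontal, 90 = vertical, 45 and 135 = oblique).
PREFERRED_ANGLES = [0, 45, 90, 135]


def orientation_response(edge_deg, preferred_deg, sharpness=4.0):
    """Toy tuning curve: 1.0 when the edge matches the preferred angle.

    An edge at 0 degrees and one at 180 degrees look the same, so the
    curve repeats every 180 degrees (hence the factor of 2).
    """
    delta = math.radians(edge_deg - preferred_deg)
    return math.exp(sharpness * (math.cos(2 * delta) - 1.0))


def best_cell(edge_deg):
    """Return the preferred angle of the cell that responds most strongly."""
    return max(
        PREFERRED_ANGLES,
        key=lambda preferred: orientation_response(edge_deg, preferred),
    )


# Rotate an edge slowly and note which cell responds best at each step.
for angle in range(0, 180, 15):
    print(angle, best_cell(angle))
```

As the edge rotates, the most active cell in this toy population changes from the horizontal cell to the oblique cells to the vertical cell, which mirrors the rotating block described above.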

I found this work enthralling. As a scientific contribution to brain science, it stands as the most fundamental advance in our understanding of the organization of the cerebral cortex since the work of Cajal at the turn of the last century. Cajal revealed the precision of the interconnections between populations of individual nerve cells. Mountcastle, Hubel, and Wiesel revealed the functional significance of those patterns of interconnections. They showed that the connections filter and transform sensory information on the way to and within the cortex, and that the cortex is organized into functional compartments, or modules.

As a result of the work of Mountcastle, Hubel, and Wiesel, we can begin to discern the principles of cognitive psychology on the cellular level. These scientists confirmed the inferences of the Gestalt psychologists by showing us that the belief that our perceptions are precise and direct is an illusion, a perceptual illusion. The brain does not simply take the raw data that it receives through the senses and reproduce it faithfully. Instead, each sensory system first analyzes and deconstructs, then restructures the raw, incoming information according to its own built-in connections and rules (shades of Immanuel Kant!).

The sensory systems are hypothesis generators. We confront the world neither directly nor precisely, but as Mountcastle pointed out:

. . . from a brain linked to what is "out there" by a few million fragile sensory nerve fibers, our only information channels, our lifelines to reality. They provide also what is essential for life itself: an afferent excitation that maintains the conscious state, the awareness of self.

Sensations are set by the encoding functions of sensory nerve endings, and by the integrating neural mechanics of the central nervous system. Afferent nerve fibers are not high fidelity recorders, for they accentuate certain stimulus features, neglect others. The central neuron is a story-teller with regard to the nerve fibers, and it is never completely trustworthy, allowing distortions of quality and measure. . . . Sensation is an abstraction, not a replication, of the real world.

Subsequent work on the visual system showed that in addition to dissecting objects into linear segments, other aspects of visual perception (motion, depth, form, and color) are segregated from one another and conveyed in separate pathways to the brain, where they are brought together and coordinated into a unified perception. An important part of this segregation occurs in the primary visual area of the cortex, which gives rise to two parallel pathways. One pathway, the "what" pathway, carries information about the form of an object: what the object looks like. The other, the "where" pathway, carries information about the movement of the object in space: where the object is located. These two neural pathways end in higher regions of the cortex that are concerned with more complex processing.

The idea that different aspects of visual perception might be handled in separate areas of the brain was predicted by Freud at the end of the nineteenth century, when he proposed that the inability of certain patients to recognize specific features of the visual world was due not to a sensory deficit (resulting from damage to the retina or the optic nerve), but to a cortical defect that affected their ability to combine aspects of vision into a meaningful pattern. These defects, which Freud called agnosias (loss of knowledge), can be quite specific. For example, there are specific defects caused by lesions in either the "where" or the "what" pathway. A person with depth agnosia due to a defect in the "where" system is unable to perceive depth but has otherwise intact vision. One such person was unable "to appreciate depth or thickness of objects seen. . . . The most corpulent individual might be a moving cardboard figure; everything is perfectly flat." Similarly, persons with motion agnosia are unable to perceive motion, but all other perceptual abilities are normal.

Striking evidence indicates that a discrete region of the "what" pathway is specialized for face recognition. Following a stroke, some people can recognize a face as a face, the parts of the face, and even specific emotions expressed on the face but are unable to identify the face as belonging to a particular person. People with this disability (prosopagnosia) often cannot recognize close relatives or even their own face in the mirror. They have not lost the ability to recognize a person's identity; they have lost the connection between a face and an identity. To recognize a close friend or relative, these patients must rely on the person's voice or other non-visual clues. In his classic essay "The Man Who Mistook His Wife for a Hat," the gifted neurologist and neuropsychologist Oliver Sacks describes a patient with prosopagnosia who failed to recognize his wife sitting next to him and, thinking she was his hat, tried to pick her up and put her on his head as he was about to leave Sacks's office.

How is information about motion, depth, color, and form, which is carried by separate neural pathways, organized into a cohesive perception? This problem, called the binding problem, is related to the unity of conscious experience: that is, to how we see a boy riding a bicycle not by seeing movement without an image or an image that is stationary, but by seeing in full color a coherent, three-dimensional, moving version of the boy. The binding problem is thought to be resolved by bringing into association temporarily several independent neural pathways with discrete functions. How and where does this binding occur? Semir Zeki, a leading student of visual perception at

At first glance, the problem of integration may seem quite simple. Logically it demands nothing more than that all the signals from the specialized visual areas be brought together, to 'report' the results of their operations to a single master cortical area. This master area would then synthesize the information coming from all these diverse sources and provide us with the final image, or so one might think. But the brain has its own logic. . . . If all the visual areas report to a single master cortical area, who or what does that single area report to? Put more visually, who is 'looking' at the visual image provided by that master area? The problem is not unique to the visual image or the visual cortex. Who, for example, listens to the music provided by a master auditory area, or senses the odour provided by the master olfactory cortex? It is in fact pointless pursuing this grand design. For here one comes across an important anatomical fact, which may be less grand but perhaps more illuminating in the end: there is no single cortical area to which all other cortical areas report exclusively, either in the visual or in any other system. In sum, the cortex must be using a different strategy for generating the integrated visual image.

When a cognitive neuroscientist peers down at the brain of an experimental animal, the scientist can see which cells are firing and can read out and understand what the brain is perceiving. But what strategy does the brain use to read itself out? This question, which is central to the unitary nature of conscious experience, remains one of the many unresolved mysteries of the new science of mind.

An initial approach was developed by Ed Evarts, Robert Wurtz, and Michael Goldberg at NIH. They pioneered methods for recording the activity of single nerve cells in the brains of intact, behaving monkeys, focusing on cognitive tasks that require action and attention. Their new research techniques enabled investigators such as Anthony Movshon at NYU and William Newsome at Stanford to correlate the action of individual brain cells with complex behavior (that is, with perception and action) and to see the effects on perception and action of stimulating or reducing activity in small groups of cells.

These studies also made it possible to examine how the firing of individual nerve cells involved in perceptual and motor processing is modified by attention and decision making. Thus unlike behaviorism, which focused only on the behavior stemming from an animal's response to a stimulus, or cognitive psychology, which focused on the abstract notion of an internal representation, the merger of cognitive psychology and cellular neurobiology revealed an actual physical representation (an information-processing capability in the brain) that leads to a behavior. This work demonstrated that the unconscious inference described by Helmholtz in 1860, the unconscious information processing that intervenes between a stimulus and a response, could also be studied on the cellular level.

The cellular studies of internal representation in the cerebral cortex of the sensory and motor world were extended in the 1980s with the introduction of brain imaging. These techniques, such as positron emission tomography (PET) and functional magnetic resonance imaging (fMRI), carried the work of Paul Broca, Carl Wernicke, Sigmund Freud, the British neurologist John Hughlings Jackson, and Oliver Sacks a giant step forward by revealing the locale in the brain of a variety of complex behavioral functions. With these new technologies, investigators could look into the brain and see not simply single cells, but also neural circuits in action.

I had become convinced that the key to understanding the molecular mechanisms of spatial memory was understanding how space is represented in the hippocampus. As one might expect because of its importance in explicit memory, the spatial memory of environments has a prominent internal representation in the hippocampus. This is evident even anatomically. Birds in which spatial memory is particularly important (those that store food at a large number of sites, for example) have a larger hippocampus than other birds.

To address these questions, I brought the tools and insights of molecular biology to bear on existing studies of the internal representation of space in mice. We had begun by using genetically modified mice to study the effect of specific genes on long-term potentiation in the hippocampus and on explicit memory of space. We were now ready to ask how long-term potentiation helps stabilize the internal representation of space and how attention, a defining feature of explicit memory storage, modulates the representation of space. This compound approach, extending from molecules to mind, opened up the possibility of a molecular biology of cognition and attention and completed the outlines of a synthesis that led to a new science of mind.